For our final project, I’m collaborating with Clemence Debaig and Romain Biros on a series of interactive performances that explore how audience engagement shifts as the level of participation and interactivity changes. The project is still a work in progress; we’re currently preparing for the performance itself.

We started by looking at different types of interaction between performers and audiences. We struggled to find examples of performer-to-audience and audience-to-audience interaction. Audience-to-audience interaction is an established mode, but it usually appears in games rather than in art. We decided to create performances that interrogate this gap. Following Helen’s remarks in our last class, we will focus on the relationship between audience and performer.

One of the texts I referred to when developing this concept was “The Space of Electronic Time: The Memory Machines of Jim Campbell” by Marita Sturken. She writes:

“It is the visitor rather than the artist who performs the piece in an installation… An interactive work constructs a complex negotiation with its viewers, both anticipating their potential responses and allowing for their agency in some way.”

Our performance, “Mirror”, is one of the most complex performances I’ve ever been part of. My entanglement with the scene (to borrow the framing from last week’s discussion of material technoscience) involves inhabiting many roles at once. Before the performance, I’m helping to develop the concept and its execution, and I’m programming the technology we use. During the performance, I’ll be a performer, an observer, and a surveyor of audience reactions. Finally, after the performance is over, I’ll join my teammates in theorizing about it and writing a final text.

Spatially, the positions of performers and audience members will shift, allowing for multilayered physical perspectives. For one of the performances, I’m developing a MaxMSP program to be displayed on laptops that the performers carry as they move through the space. It feels strange to create something that different people will see at different times; every participant’s experience will be different.

I hope the performance goes smoothly, but there are still a lot of moving parts. As mentioned above, we depend on the audience to perform the piece, so they have to show up. We will try to direct their behavior, but we can’t control it completely. That’s always a caveat when creating an interactive performance. We’ll see!

References

Sturken, Marita. “The Space of Electronic Time: The Memory Machines of Jim Campbell.” *Space, Site, Intervention: Situating Installation Art*, edited by Erika Suderburg, University of Minnesota Press, 2000, pp. 287–296.

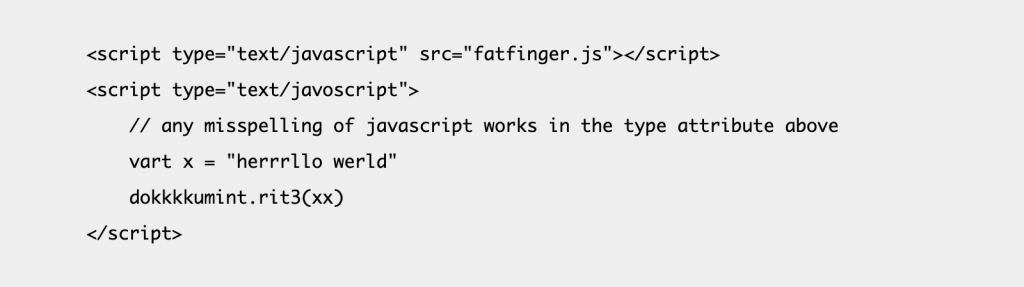

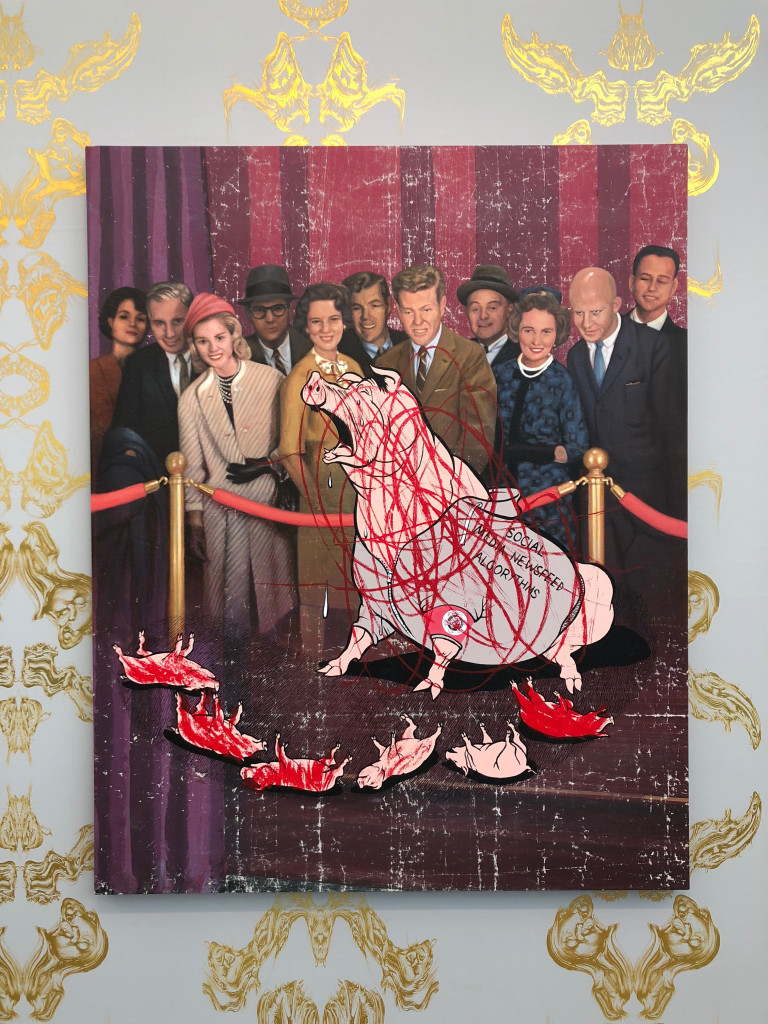

FatFinger by Daniel Temkin

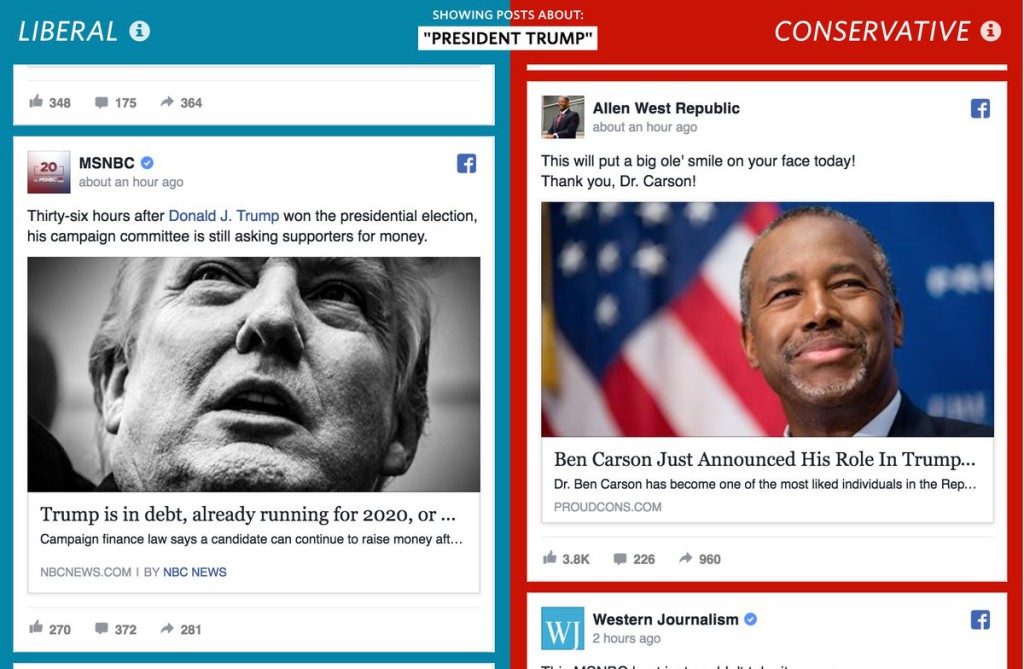

Blue Feed, Red Feed by The Wall Street Journal